Interpretable Dynamics Models for Data-Efficient Reinforcement Learning

Abstract

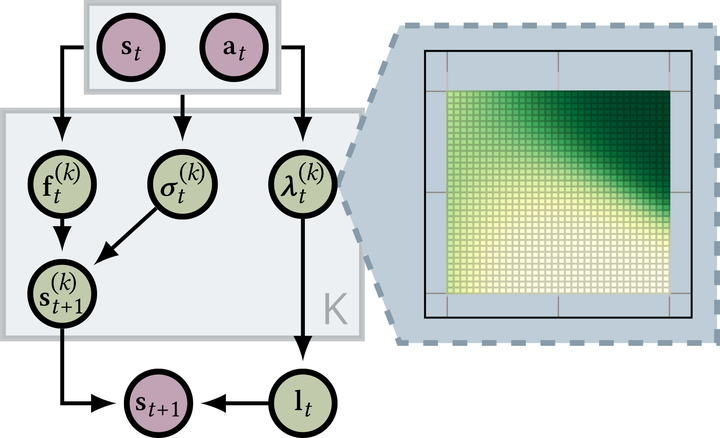

In this paper, we present a Bayesian view on model-based reinforcement learning. We use expert knowledge to impose structure on the transition model and present an efficient learning scheme based on variational inference. This scheme is applied to a heteroskedastic and bimodal benchmark problem on which we compare our results to NFQ and show how our approach yields human-interpretable insight about the underlying dynamics while also increasing data-efficiency.

Type

Publication

ESANN